AI may have sexist tendencies. But, sorry, the problem is still us humans.

Amazon recently scrapped an employee recruiting algorithm plagued with problems, according to a report from Reuters. Ultimately, the applicant screening algorithm did not return relevant candidates, so Amazon canned the program. But in 2015, Amazon had a more worrisome issue with this AI: it was down-ranking women.

The algorithm was only ever used in trials, and engineers manually corrected for the problems with bias. However, the way the algorithm functioned, and the existence of the product itself, speaks to real problems about gender disparity in tech and non-tech roles, and the devaluation of perceived female work.

Amazon created its recruiting AI to automatically return the best candidates out of a pool of applicant resumes. The company discovered that the algorithm would down-rank resumes that included the word "women's," and even resumes that named two women's colleges. It would also give preference to resumes that contained what Reuters called "masculine language," or strong verbs like "executed" or "captured."

These patterns began to appear because the engineers trained their algorithm on resumes that past candidates had submitted over the previous ten years. And lo and behold, the majority of the most attractive candidates were men. Essentially, the algorithm found evidence of gender disparity in technical roles, and optimized for it; it neutrally replicated an endemic societal preference for men, wrought by an educational system and cultural bias that encourage men and discourage women in the pursuit of STEM roles.
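
To make that mechanism concrete, here is a minimal sketch of how a scoring model can pick up bias from historical outcomes. It is not Amazon's system, whose internals were not published; the toy resumes, the `token_weights` and `score` helpers, and the smoothed log-odds scoring are all assumptions chosen purely for illustration.

```python
import math
from collections import Counter

# Hypothetical toy history: (resume text, was the candidate hired?).
# The skew toward resumes without "women's" is deliberate, to mimic
# a decade of male-dominated hiring.
HISTORY = [
    ("executed migration captured requirements", True),
    ("executed product launch", True),
    ("captured market share led team", True),
    ("led women's chess club built compiler", False),
    ("women's college graduate built compiler", False),
    ("built compiler led team", True),
]


def token_weights(history, smoothing=1.0):
    """Smoothed log-odds per token: positive means the token showed up
    more often in hired resumes than in rejected ones."""
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        (hired if was_hired else rejected).update(set(text.split()))
    n_hired = sum(1 for _, was_hired in history if was_hired)
    n_rejected = len(history) - n_hired
    weights = {}
    for token in set(hired) | set(rejected):
        p_hired = (hired[token] + smoothing) / (n_hired + 2 * smoothing)
        p_rejected = (rejected[token] + smoothing) / (n_rejected + 2 * smoothing)
        weights[token] = math.log(p_hired / p_rejected)
    return weights


def score(resume, weights):
    """Sum the learned weights of the distinct tokens in a resume."""
    return sum(weights.get(token, 0.0) for token in set(resume.split()))


WEIGHTS = token_weights(HISTORY)

# Two resumes that differ only by the word "women's".
with_term = score("led women's chess club built compiler", WEIGHTS)
without_term = score("led chess club built compiler", WEIGHTS)
print(with_term < without_term)  # prints True
```

Nothing in the code mentions gender. The penalty on "women's" and the bonus for "executed" come entirely from who was hired in the past, which is the sense in which an algorithm can "neutrally" replicate a human bias.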

Amazon emphasized in an email to Mashable that it scrapped the program because it was ultimately not returning relevant candidates; it dealt with the sexism problem early on, but the AI as a whole just didn't work that well.

However, the creation of hiring algorithms themselves — not just at Amazon, but across many companies — still speaks to another sort of gender bias: the devaluing of female-dominated Human Resources roles and skills.

According to the U.S. Department of Labor (via the workforce analytics provider Visier), women occupy nearly three-fourths of H.R. managerial roles. This is great news for overall female representation in the workplace. But the disparity exists thanks to another sort of gender bias.

There is a perception that H.R. jobs are feminine roles. The Globe and Mail writes in its investigation of sexism and gender disparity in HR:

The perception of HR as a woman's profession persists. This image that it is people-based, soft and empathetic, and all about helping employees work through issues leaves it largely populated by women as the stereotypical nurturer. Even today, these "softer" skills are seen as less appealing – or intuitive – to men who may gravitate to perceived strategic, analytical roles, and away from employee relations.

Amazon and other companies that pursued AI integrations in hiring wanted to streamline the process, yes. But automating a people-based process shows a disregard for people-based skills that are less easy to mechanically reproduce, like intuition or rapport. Reuters reported that Amazon's AI identified attractive applicants through a five-star rating system, "much like shoppers rate products on Amazon"; who needs empathy when you've got five stars?

In Reuters' report, these companies suggest hiring AI as a complement or supplement to more traditional methods, not an outright replacement. But the drive in the first place to automate a process run by a female-dominated division shows the other side of the coin of the algorithm's preference for "male language"; where "executed" and "captured" verbs are subconsciously favored, "listened" or "provided" are shrugged off as inefficient.

The AI explosion is underway. That's easy to see in every evangelical smartphone or smart home presentation of just how much your robot can do for you, including Amazon's. But that means that society is opening itself up to create an even less inclusive world. A.I. can double down on discriminatory tendencies in the name of optimization, as we see with Amazon's recruiting A.I. (and others). That's because A.I. is both built and led by humans (and often, mostly male humans) who may unintentionally transfer their unconscious sexist biases into business decisions, and into the robots themselves.

So as our computers get smarter and permeate more areas of life and work, let's make sure to not lose what's human — alternately termed as what's "female" — along the way.

UPDATE 10/11/2018, 2:00 p.m. PT: Amazon provided Mashable with the following statement about its recruiting algorithm.

“This was never used by Amazon recruiters to evaluate candidates.”

Topics Amazon Artificial Intelligence

Best earbuds deal: Save 20% on Soundcore Sport X20 by Anker

Best earbuds deal: Save 20% on Soundcore Sport X20 by Anker

The Song Stuck in My Head by Sadie Stein

The Song Stuck in My Head by Sadie Stein

What does Roe v. Wade being overturned mean to you?

What does Roe v. Wade being overturned mean to you?

On Shakespeare and Lice

On Shakespeare and Lice

SpaceX's Starlink will provide free satellite internet to families in Texas school district

SpaceX's Starlink will provide free satellite internet to families in Texas school district

Try First Thyself: In Praise of the Campus Dining Hall

Try First Thyself: In Praise of the Campus Dining Hall

Your Google homepage may look different on desktop soon

Your Google homepage may look different on desktop soon

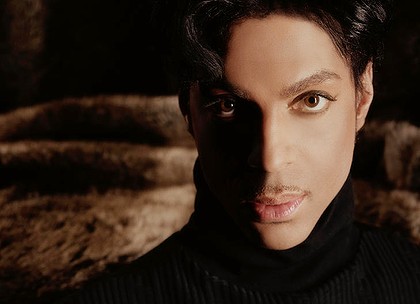

“Purple Elegy”: A Poem for Prince, by Rowan Ricardo Phillips

“Purple Elegy”: A Poem for Prince, by Rowan Ricardo Phillips

Razer Kishi V2 deal: Snag one for 50% off

Razer Kishi V2 deal: Snag one for 50% off

What does an upside

What does an upside

Today's Hurdle hints and answers for April 23, 2025

Today's Hurdle hints and answers for April 23, 2025

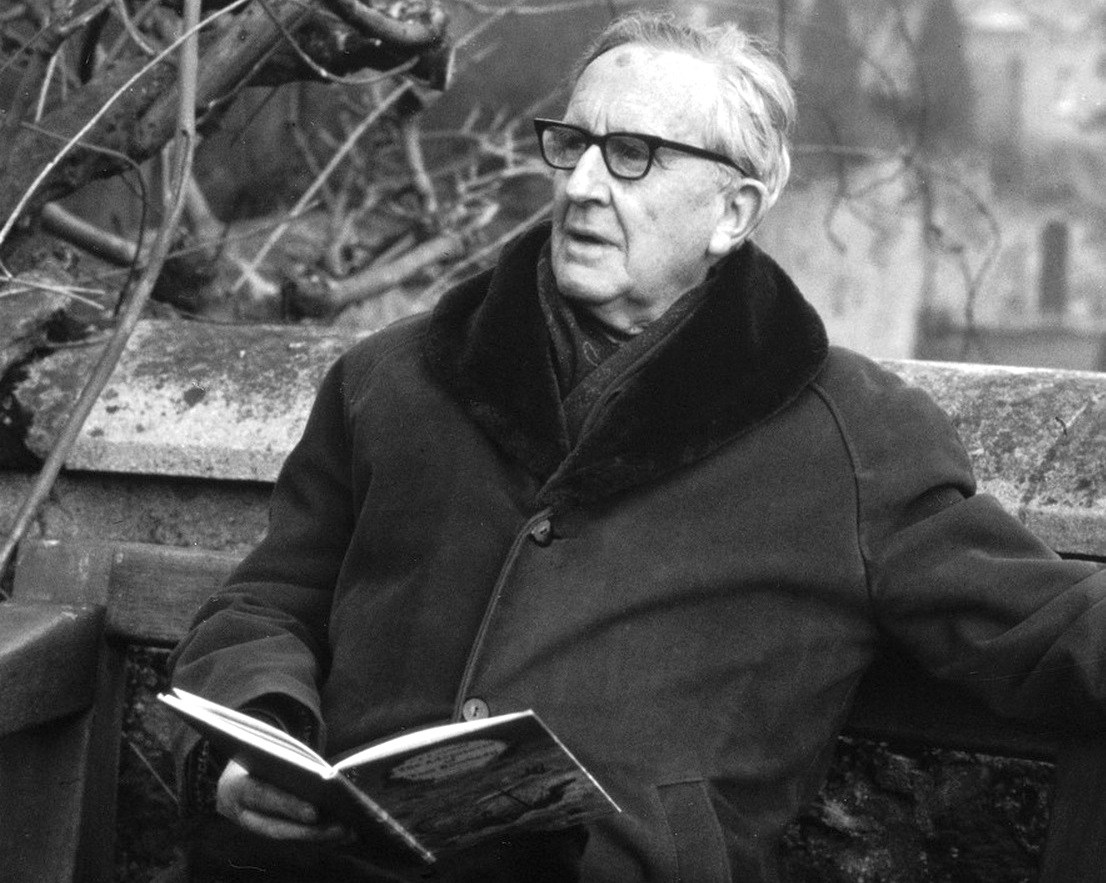

J. R. R. Tolkien, Lord of the Wireless

J. R. R. Tolkien, Lord of the Wireless

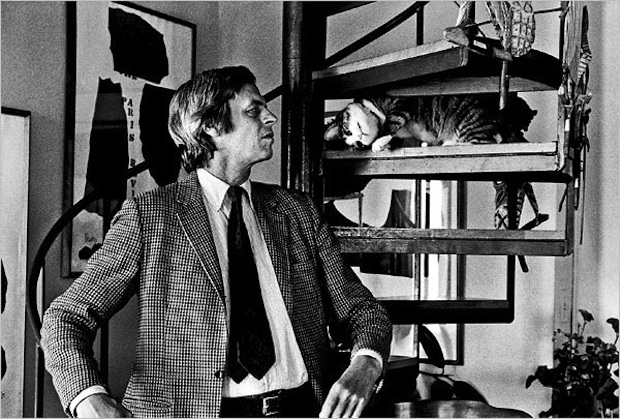

Fact: George Plimpton Did a Lot of Stuff (A Lot!)

Fact: George Plimpton Did a Lot of Stuff (A Lot!)

Lovehoney's sex toy Advent calendars: 24 days of fun, $302 off

Lovehoney's sex toy Advent calendars: 24 days of fun, $302 off

LA Galaxy vs. Tigres 2025 livestream: Watch Concacaf Champions Cup for free

LA Galaxy vs. Tigres 2025 livestream: Watch Concacaf Champions Cup for free

The Song Stuck in My Head by Sadie Stein

The Song Stuck in My Head by Sadie Stein

'V/H/S85' uses this Throbbing Gristle song to give you nightmares

'V/H/S85' uses this Throbbing Gristle song to give you nightmares

Exotic Pets of the Twenties and Thirties

Exotic Pets of the Twenties and Thirties

Best smartwatch deal: Get an Apple Watch Series 9 for 34% off

Best smartwatch deal: Get an Apple Watch Series 9 for 34% off

Pro wrestling stars are dunking on fellow wrestler, Kane, for his tweet on Roe v. Wade

Pro wrestling stars are dunking on fellow wrestler, Kane, for his tweet on Roe v. Wade

This old poem about plums has been turned into a hilariously nerdy memeMatt Lauer releases first public statement after sexual misconduct allegationsJimmy Kimmel comes for Roy Moore and his 'Christian values' with one perfect tweetHow Polycom is architecting the conference room of the futureBurger King unleashes Flamin' Hot Mac n' CheetosOmg 'Animal Crossing: Pocket Camp' is getting ChristmasTwitter Lite app launches in Asia, Latin America, Africa, and moreGoogle is being sued for allegedly planting secret cookies on 5.4 million iPhone usersSuper confident GM says its driverless cars will hit city streets by 2019Geoffrey Rush faces allegations of 'inappropriate behaviour'China is deleting posts about a kindergarten allegedly abusing its toddlersApple will go red for World AIDS DayI am the epitome of a male feminist and here's my secretAmazing new photo of Jupiter makes the planet look like a paintingAlyssa Milano vs. Ajit Pai is the net neutrality fight we need right nowBumble's popPikachu gets made ambassador for Osaka, by Japan foreign ministerApple and Stanford team up for app that looks for irregular heart rhythmsPikachu gets made ambassador for Osaka, by Japan foreign minister2018 will be the year cinema starts responding to the Trump election Durex is recruiting condom testers in the UK Stephen King tweets his 'Pet Sematary: Bloodlines' review Urban Dictionary names are going viral across the internet iPhone 15 Pro can record spatial Vision Pro videos Piero di Cosimo Painted the Dark Side of the Renaissance Doctors approve DIY diabetes treatment systems Apple Watch Series 9 and Ultra 2: Hands on with Double Tap Apple introduces new iCloud+ plans with 6TB and 12TB of storage W. H. 
Auden‘s Undergraduate Syllabus: 6,000 Pages of Reading Graceland Too: Saying Goodbye to An Eccentric’s Elvis Shrine A24 is selling the freaky hand from 'Talk to Me' Apple's most useless dongle ever costs $29 Shocked monkey in a very awkward position wins comedy wildlife photo prize Patricia Highsmith on Murder, Murderers, and Morality Reading the First American Novel, Published 226 Years Ago 'The Other Black Girl' review: Part satire, part horror, all fun Tomi Ungerer on Drawing, Politics, and Pushing the Envelope Wordle today: Here's the answer and hints for September 13 Windows on the World: The View from Himeji City, Japan Can TikTok's algorithm tell when you’ve had your heart broken?

2.1409s , 8226.8046875 kb

Copyright © 2025 Powered by 【Watch RK Prime 29 Online】,Wisdom Convergence Information Network